AutoEncoders in Keras: Conditional VAE

In the last part, we met variational autoencoders (VAE), implemented one on keras, and also understood how to generate images using it. The resulting model, however, had some drawbacks:

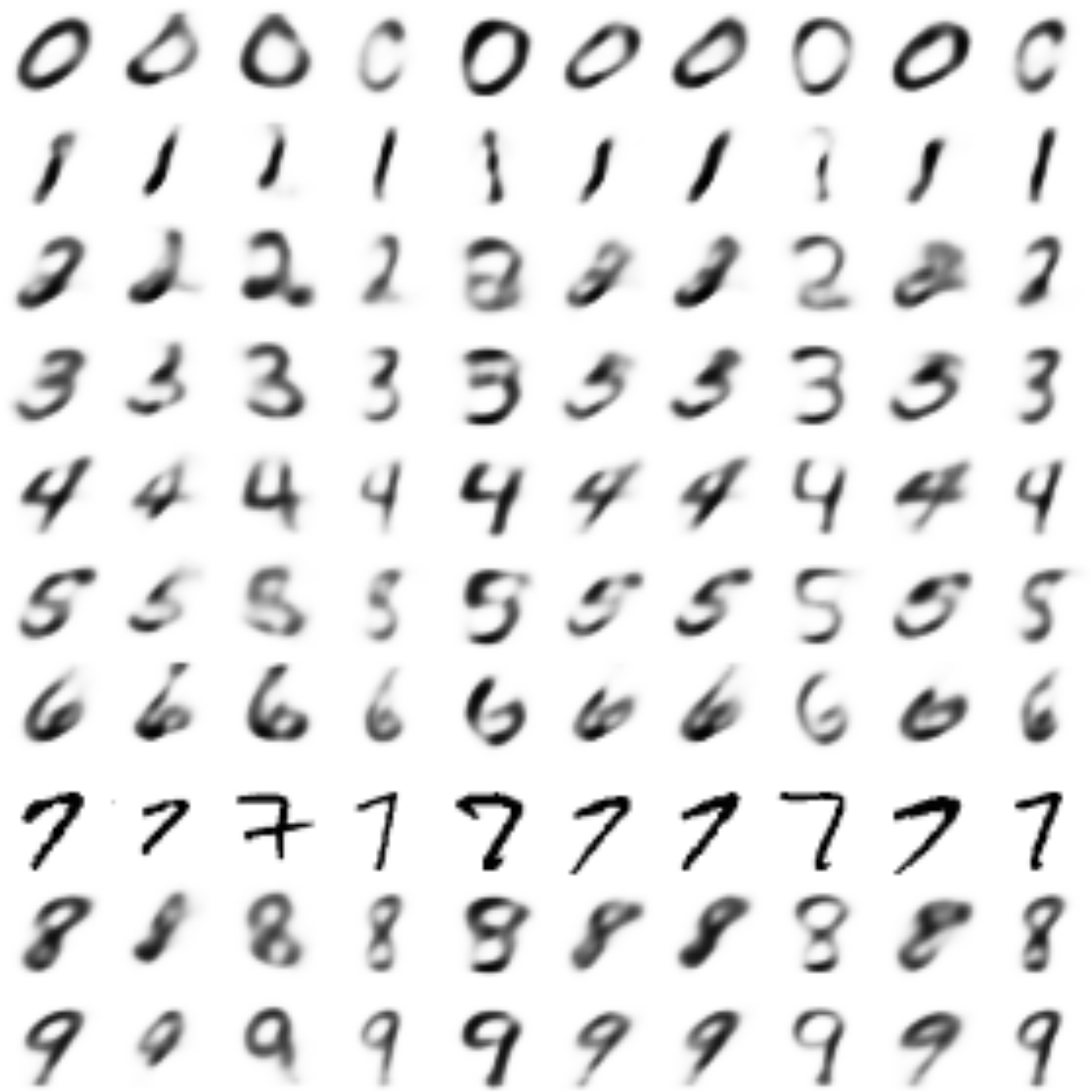

Not all the numbers turned out to be well encoded in the latent space: some of the numbers were either completely absent or were very blurry. In between the areas in which the variants of the same number were concentrated, there were generally some meaningless hieroglyphs.

It was difficult to generate a picture of a given digit. To do this, one had to look into what area of the latent space the images of a specific digit fell into, and to sample it from somewhere there, and even more so it was difficult to generate a digit in some given style.

In this part, we will see how only by slightly complicating the model to overcome both these problems, and at the same time we will be able to generate pictures of new numbers in the style of another digit - this is probably the most interesting feature of the future model.

Full Russian text is available here